Recent research by Google showed that Artificial Intelligence and Machine Learning are among the hottest fields of engineering, and the future of robotics is predicted to rely on both technologies. This article, however, focuses on how these two technologies can be applied to robotics today.

Artificial Intelligence, Machine Learning and Robotics

What is Artificial Intelligence?

Artificial intelligence (AI) is a field of computer science that involves the study of intelligent agents: any device that perceives its environment and takes actions that maximize its chance of success at some goal. AI systems are used to make complex decisions under uncertain conditions and are capable of learning from experience. Some examples include computer vision, speech recognition, natural language processing (NLP), planning, and decision-making.

AI has been defined as “the study and design of intelligent agents,” where an intelligent agent is a system that perceives its environment and takes actions to achieve goals. Goals can be stated explicitly or inferred; for example, an operator may consider a task complete when all security threats have been eliminated or when all priority tasks have been completed.
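The perceive-then-act cycle of an intelligent agent can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the one-dimensional world, the goal position, and the function names `perceive`, `choose_action`, and `run_agent` are all hypothetical.

```python
# Hypothetical 1-D world: the agent sits at an integer position and wants to reach GOAL.
GOAL = 5

def perceive(position):
    """Sensor reading: signed distance from the agent to the goal."""
    return GOAL - position

def choose_action(distance):
    """Pick the step (-1, 0, or +1) that brings the agent closer to the goal."""
    if distance > 0:
        return 1
    if distance < 0:
        return -1
    return 0

def run_agent(start, max_steps=20):
    """Repeat the perceive-act cycle until the goal is reached or steps run out."""
    position = start
    path = [position]
    for _ in range(max_steps):
        action = choose_action(perceive(position))
        if action == 0:  # goal reached, nothing left to do
            break
        position += action
        path.append(position)
    return path
```

Calling `run_agent(2)` returns the list of positions the agent visits on its way to the goal. A real robot replaces `perceive` with sensor drivers and `choose_action` with a planner or a learned policy.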

What is Machine Learning?

Machine learning is a branch of artificial intelligence (AI) that overlaps heavily with data science. In machine learning, computers are “trained” to learn from data; the process can include such techniques as statistical modeling, pattern recognition, and prediction. The term machine learning was coined by Arthur Samuel in 1959. Other researchers subsequently joined the area, and it has grown into an academic discipline in its own right.

Machine learning is the study and construction of algorithms that learn from data, that is, algorithms that improve their accuracy or performance with experience without being explicitly programmed by a human to do so. Inductive logic programming (ILP), sometimes mentioned in this context, is one subfield of machine learning rather than an earlier name for it. Machine learning also differs from rule-based approaches such as knowledge-based and expert systems, whose behavior is written down in advance as rules tied to predetermined conditions and actions. Such rules fire at runtime when a matching event occurs, for example when a sensor detects a change in the system state or when a timer expires after a set interval.

The field of machine learning has developed a wide range of highly effective algorithms, often based on statistical analysis and optimization. These algorithms are used for applications such as:

- Feature extraction (e.g., the automated detection of cancer cells),

- Pattern recognition (e.g., face identification),

- Data mining (e.g., discovering new associations between different variables in large datasets),

- Predictive analytics (e.g., estimating future results based on historical patterns).

How do We Define Robotics?

For those unfamiliar with the term, robotics is a branch of engineering that deals with robots. The word robot comes from the Czech word “robota,” which means forced labor. Robots are automatons that work according to pre-programmed instructions and can perform tasks automatically without human supervision. They can also be programmed to interact with the environment and make decisions based on their observations.

A robot may be defined as an electromechanical system that carries out tasks under the control of a stored computer program, running on a controller such as a personal computer or a PLC. The program reads the robot’s sensors to perceive its surroundings and drives its actuators (for example, motors) to move and to manipulate objects.

How Does ML Work in Robotics?

Machine learning is a subset of AI that uses data to train itself. A machine learning algorithm (MLA) analyzes past experiences and makes predictions based on those experiences. This means that MLAs are used to learn from experience, which allows them to make decisions without being explicitly programmed for them.

MLAs can be used in robotics for any task where the robot needs to learn from its own experience, including:

- Learning about its environment over time so that it can navigate through new spaces more effectively.

- Making predictions about the future state of its environment based on historical records.

- Deciding what action(s) will best achieve a certain goal.
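As a minimal sketch of the second item, predicting a future state from historical records, the example below fits a straight line to past observations with ordinary least squares and extrapolates one step ahead. The data (distance to a moving obstacle, sampled once per second) and the function names are hypothetical, and a real robot would typically use a richer model.

```python
def fit_line(times, values):
    """Ordinary least-squares fit of values = slope * time + intercept."""
    n = len(times)
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    cov = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    var = sum((t - mean_t) ** 2 for t in times)
    slope = cov / var
    intercept = mean_v - slope * mean_t
    return slope, intercept

def predict(model, t):
    """Evaluate the fitted line at time t."""
    slope, intercept = model
    return slope * t + intercept

# Hypothetical history: distance (m) to a moving obstacle at one-second intervals.
history_t = [0, 1, 2, 3]
history_d = [10.0, 8.0, 6.0, 4.0]
model = fit_line(history_t, history_d)
next_distance = predict(model, 4)  # extrapolate one second into the future
```

The obstacle in this made-up history closes in by 2 m every second, so the fitted line predicts that it will be about 2 m away at the next time step.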

How Does AI Work in Robotics?

In robotics, AI refers to software that lets a robot learn, adapt, and improve its performance over time. This is one of the most important concepts in robotics and machine learning.

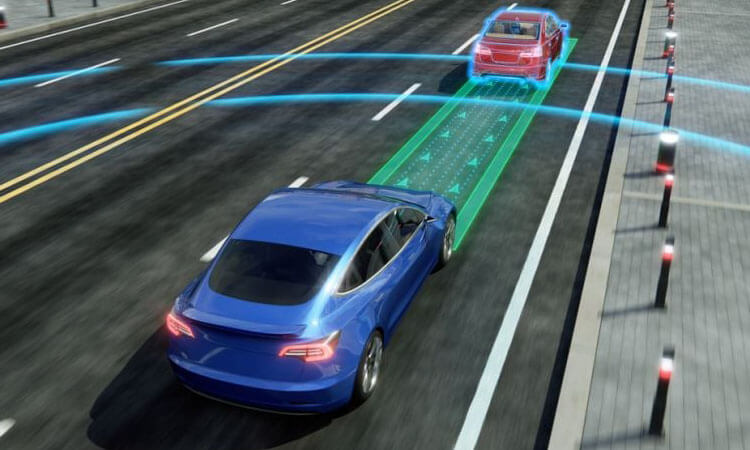

AI is used to power robots. It allows them to perform tasks effectively without being explicitly programmed for every situation. For example, an autonomous vehicle without AI could only drive straight or perform very basic maneuvers such as turning right or left at intersections. With AI, the vehicle can navigate complex environments, such as built-up areas and unfamiliar routes, because it works out where it is and where it is going from the information it receives from its sensors, such as GPS and cameras mounted on the car body.

How has Robotic Intelligence Developed Over the Last Few Decades?

Robotic intelligence has its roots in the 1950s and the work of W. Ross Ashby, a British cyberneticist. Before that time, engineers built machines around fixed rules based on their experience and intuition. Ashby used mathematical models to design a machine that could adapt to its environment and adjust its behavior based on past experience. This line of work contributed to the development of neural networks and computer simulations of human brain functioning.

The first industrial robot was manufactured by Unimation in 1961. It could perform simple tasks such as loading and unloading boxes on a conveyor belt at a rate of 40 per hour using two vacuum-controlled fingers per hand (the total number of fingers being four). By 1974, over 30,000 robots were working in factories around the world.

Artificial Intelligence and Machine Learning in Robotics

You can think of artificial intelligence and machine learning as technologies that help robots perform tasks better than they could without them. AI and ML in robotics are two sides of the same coin: both allow robots to learn from their interactions with the environment around them.

Many people think of AI as a science fiction concept, but it is already used in many aspects of our daily lives. For example, Siri on your iPhone uses speech recognition and natural language processing (NLP) algorithms to understand what you say and respond appropriately. Alexa on Amazon Echo devices uses NLP to understand users’ requests, such as “What’s the weather?” or “Tell me a joke.” Google Translate also uses NLP algorithms to translate text into another language, like German or French.

Current Applications of Machine Learning in Robotics

Imitation Learning

Imitation learning is a machine learning approach that enables an agent to learn from the behavior of other agents or humans. It is closely related to observational learning, a behavior exhibited by infants and toddlers. In imitation learning, a teacher demonstrates the desired behavior and the agent learns to mimic it. This approach is often used in robotics because manually programming robots for mobility outside a factory setting, in domains like construction, agriculture, search and rescue, and the military, can be challenging.

Imitation learning is closely related to reinforcement learning, in which an agent acts in the world to maximize its rewards; in imitation learning, the teacher’s demonstrations replace or supplement the reward signal. Bayesian or probabilistic models are often used to help the agent learn a policy that maps states to actions. The question of whether imitation learning could be used for humanoid-like robots was first posed in 1999.
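A policy that maps states to actions can be illustrated with behavioral cloning, one of the simplest forms of imitation learning. The sketch below swaps the probabilistic models mentioned above for a plain nearest-neighbor lookup, and the demonstration data, state encoding, and action names are all hypothetical.

```python
import math

# Hypothetical demonstrations: (state, action) pairs recorded from a teacher.
# State = (distance to obstacle in m, bearing to obstacle in degrees).
DEMONSTRATIONS = [
    ((5.0, 0.0), "forward"),
    ((0.5, 0.0), "stop"),
    ((1.5, -30.0), "turn_right"),
    ((1.5, 30.0), "turn_left"),
]

def cloned_policy(state, demos=DEMONSTRATIONS):
    """Return the teacher's action from the most similar demonstrated state.

    Similarity is plain Euclidean distance on the raw features; a real system
    would normally rescale features so that no single one dominates.
    """
    _, action = min(demos, key=lambda demo: math.dist(demo[0], state))
    return action
```

For a new state such as `(4.0, 5.0)`, the closest demonstration is the open-road one, so the cloned policy drives forward. With only four demonstrations the policy is crude; its quality depends on how well the demonstrations cover the states the robot will meet.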

Researchers have used imitation learning to develop robots that can perform various tasks, including grasping objects, walking, and navigating off-road terrain. For instance, CMU has applied inverse optimal control methods to develop humanoid robotics, legged locomotion, and off-road rough-terrain mobile navigators. A video published two years ago by researchers from Arizona State University shows a humanoid robot using imitation learning to acquire different grasping techniques.

Bayesian belief networks have also been applied to forward learning models, where a robot learns without prior knowledge of its motor system or the external environment. An example of this is “motor babbling,” demonstrated by the Language Acquisition and Robotics Group at the University of Illinois at Urbana-Champaign (UIUC) with Bert, the “iCub” humanoid robot. Because imitation learning lets robots learn from the actions of humans or other agents, it makes solutions for complex tasks in various domains easier to formulate.

Computer Vision

Computer vision is a rapidly advancing field that combines computer algorithms and camera hardware to enable robots to process physical data. This technology is essential for robot guidance and automatic inspection systems and has numerous applications, including object identification and sorting. While computer vision, machine vision, and robot vision are often used interchangeably, robot vision encompasses reference frame calibration and a robot’s ability to affect its environment physically.

Recent advances in computer vision have been fueled by the influx of big data, including annotated and labeled photos and videos available on the web. Machine-learning-based structured prediction learning techniques at universities like Carnegie Mellon have been instrumental in developing computer vision applications such as object identification and sorting. One example of a recent breakthrough is using unsupervised learning for anomaly detection, which involves building systems capable of finding and assessing faults in silicon wafers using convolutional neural networks.

The development of extrasensory technologies like radar and ultrasound is driving the creation of 360-degree vision-based systems for autonomous vehicles and drones. Companies like Nvidia are at the forefront of this technology, which is used to improve the accuracy and safety of autonomous vehicles and drones. By combining computer vision with extrasensory technologies, researchers are creating systems that can detect and avoid obstacles, navigate complex environments, and perform various other tasks with unprecedented precision and accuracy.

Assistive and Medical Technologies

Assistive and medical technologies are areas where machine learning-based robotics have made significant advances. These technologies are designed to benefit people with disabilities, seniors, and patients in the medical world.

Assistive robots can sense, process sensory information, and perform actions that are helpful to people. Due to cost constraints, movement therapy robots, which provide diagnostic or therapeutic benefits, are still largely confined to the lab. An early example of assistive technology is the DeVAR (Desktop Vocational Assistant Robot), developed in the early 1990s.

More recent examples of machine learning-based robotic assistive technologies include the MICO robotic arm developed at Northwestern University. This arm uses a Kinect sensor to observe the world and adapt to user needs with partial autonomy, meaning a sharing of control between the robot and human.
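One common way to think about sharing control between a human and a robot is to blend their commands with a weight. The sketch below is a generic illustration of that idea, not the method used in the MICO work; the function name, the command format (a tuple of velocities), and the `autonomy` parameter are assumptions.

```python
def blend_commands(user_cmd, robot_cmd, autonomy):
    """Linearly blend two velocity commands.

    autonomy = 0.0 gives full user control, 1.0 gives full robot control.
    """
    if not 0.0 <= autonomy <= 1.0:
        raise ValueError("autonomy must be between 0 and 1")
    return tuple(
        (1.0 - autonomy) * u + autonomy * r
        for u, r in zip(user_cmd, robot_cmd)
    )
```

With `autonomy=0.25`, a user command of `(1.0, 0.0)` and a robot suggestion of `(0.0, 1.0)` blend to a command that is three-quarters the user's and one-quarter the robot's. In practice the weight could itself be adapted, for example by raising it as the robot becomes more confident about the user's goal.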

In the medical world, machine learning-based robotics is significantly improving surgical precision and reliability. The Smart Tissue Autonomous Robot (STAR) is a collaboration between researchers at multiple universities and a network of physicians, piloted through the Children’s National Health System in DC. STAR can stitch together pig intestines with better precision and reliability than the best human surgeons. While not intended to replace human surgeons, STAR offers significant benefits in performing similar types of delicate surgeries.

Self-Supervised Learning

Self-supervised learning is a powerful machine learning approach in which a system generates its own training examples to improve performance. It benefits robots and other devices that have limited access to labeled data. This approach has been used in various applications, including object detection, scene analysis, and vehicle dynamics modeling.

One fascinating example of self-supervised learning in action is Watch-Bot, a robot developed by researchers at Cornell and Stanford. Using a combination of sensors and probabilistic methods, Watch-Bot can detect normal human activity patterns and use a laser pointer to remind humans of tasks like putting the milk back in the fridge. In initial tests, Watch-Bot successfully reminded humans 60% of the time, and the researchers have continued improving its capabilities through a project called RoboWatch.

Another example of self-supervised learning in robotics is a road detection algorithm developed at MIT for autonomous vehicles and other mobile robots. This algorithm uses a front-view camera and a probabilistic distribution model to identify roads and obstacles on the path.

In addition to self-supervised learning, autonomous learning is another variant of machine learning that can benefit robots and other autonomous devices. One approach, developed by a team at Imperial College London, uses deep learning and unsupervised methods to incorporate model uncertainty into long-term planning and controller learning. This statistical machine-learning approach can reduce the impact of model errors and speed up the learning process, as demonstrated in a manipulator video by the team.

Multi-Agent Learning

Multi-agent learning is a machine learning technique that involves coordination and negotiation among multiple robots or agents to achieve a common goal. Equilibrium strategies are found through learning algorithms that allow the agents to adapt to a changing environment. One example of this approach is using no-regret learning tools, which utilize weighted algorithms to improve multi-agent planning outcomes, and learning in market-based, distributed control systems.
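A classic weighted, no-regret method is the Hedge (multiplicative weights) update, sketched below. It is offered as a representative example of the family, not as the specific tool referred to above; the loss values and the `learning_rate` setting are hypothetical.

```python
import math

def hedge_update(weights, losses, learning_rate=0.5):
    """One round of the Hedge (multiplicative weights) update.

    Each option's weight shrinks exponentially with the loss it just incurred;
    the weights are then renormalized so they sum to 1.
    """
    scaled = [w * math.exp(-learning_rate * loss)
              for w, loss in zip(weights, losses)]
    total = sum(scaled)
    return [s / total for s in scaled]

# Hypothetical example: three candidate plans; plan 1 consistently has the lowest loss.
weights = [1 / 3, 1 / 3, 1 / 3]
for _ in range(20):
    weights = hedge_update(weights, losses=[1.0, 0.1, 0.8])
```

After repeated rounds, almost all of the weight shifts to the plan with the lowest loss. An agent that samples its plan in proportion to these weights ends up performing nearly as well as the best fixed plan in hindsight, which is what "no-regret" means.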

MIT’s Lab for Information and Decision Systems has developed a concrete example of a distributed algorithm for robots to collaborate and build a more inclusive learning model. The robots explore a building and its room layouts, each building its own catalog, which is combined to create a knowledge base. This approach allows robots to process smaller chunks of information and achieve better results when working together than a single robot. While not perfect, this type of machine learning approach enables robots to reinforce mutual observations, compare catalogs or data sets, and correct omissions or over-generalizations.
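The idea of combining per-robot catalogs and using agreement between robots to correct errors can be sketched with simple voting. This is a simplified stand-in, not the distributed algorithm developed at MIT; the catalog format (room and object pairs) and the `min_votes` threshold are assumptions.

```python
from collections import Counter

def merge_catalogs(catalogs, min_votes=2):
    """Combine per-robot catalogs of (room, object) sightings.

    A sighting is kept only if at least `min_votes` robots reported it,
    which filters out one-off misdetections.
    """
    votes = Counter()
    for catalog in catalogs:
        votes.update(set(catalog))  # each robot votes at most once per sighting
    return {sighting for sighting, count in votes.items() if count >= min_votes}

# Hypothetical catalogs from three robots exploring the same building.
robot_a = [("kitchen", "sink"), ("kitchen", "fridge")]
robot_b = [("kitchen", "sink"), ("office", "desk")]
robot_c = [("office", "desk"), ("kitchen", "sofa")]
merged = merge_catalogs([robot_a, robot_b, robot_c])
```

Here the sink and the desk are each confirmed by two robots and survive the merge, while the single-robot sightings are dropped. Lowering `min_votes` to 1 turns the merge into a plain union, trading robustness for coverage.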

Multi-agent learning has the potential to be used in several applications, including autonomous land and airborne vehicles. This approach can lead to a more efficient and effective performance by having robots communicate and cooperate to achieve a common goal. However, further research and development are needed to optimize this technique for real-world use.

Applying Machine Learning and Artificial Intelligence to Robotics in Various Industries

Robotics can be applied in various industries, such as agriculture, healthcare, manufacturing, logistics, and so on.

- Agriculture: The use of drones for crop inspection has become very common nowadays. These drones are equipped with sensors that measure different parameters like soil moisture content, fertilizer levels, etc. The data collected from these sensors are sent back to the server, where an algorithm analyzes it and sends suggestions or alerts to farmers through SMS or mobile app notifications based on their crops/land area conditions.

- Healthcare: AI-based robots are being used in hospitals across the globe for various purposes, like assisting doctors during surgical procedures or diagnosing patients by analyzing images from MRI machines. For example, IBM’s artificial intelligence platform Watson has reportedly been deployed at Highland Hospital Partners, described as Scotland’s largest hospital group, on the strength of its ability to read medical records faster than any human doctor can (Watson was reportedly able to read through 1 million pages within 10 minutes). A survey by Accenture Strategy found that the adoption rate for digital transformation initiatives is higher among healthcare providers (60%) than in any other industry vertical, followed by financial services (52%).

- Manufacturing: The manufacturing sector is one of the most important industries in any country. AI-powered robots are being used to automate manufacturing processes and increase efficiency. For example, “Baxter,” a collaborative robot introduced by Rethink Robotics in 2012, was designed to help human workers with repetitive tasks like picking up parts from bins, with minimal supervision.

- Logistics: AI-powered robots are being used to optimize logistics processes and cut costs. For example, DHL has launched a new AI-powered robot called “PakBot.” It will help human workers scan packages, pack items into boxes and label them with barcodes to reduce the amount of time it takes to ship products around the world (this should also ensure that customers receive their orders faster).

- Education: AI-powered robots are being used for a variety of purposes in education. For example, robots are being used to help teachers grade essays and papers faster and more accurately than ever before. They can also be used in classrooms to teach children important life skills like how to cook or clean up after themselves.

Future of AI and Machine Learning in Robotics

As we have seen, AI and ML have been applied to robotics for many years. Technology is constantly evolving, opening the door for new ways of bringing AI and ML into the world of robotics. This means that it’s entirely possible that you will see AI and ML being used in ways you haven’t even thought of yet!

AI and ML can be applied to robotics in many more industries than just manufacturing or healthcare, as well as in ways beyond what we’ve discussed here. For example, researchers are looking into how machine learning algorithms based on deep learning techniques could teach robots new skills faster than ever before. One such study found that computers could learn to recognize objects around them after watching humans do so only once, something that normally takes hours or days of training.

Conclusion

You should now have a clear picture of the role of AI and machine learning in robotics. They are already used in all aspects of robotics, even though they aren’t necessary for every part of a robot’s function, and they will likely be used even more as technology advances further.

Video Source: Official Website of the National Aeronautics and Space Administration

Machine Learning in Robotics Q&A

What are Some Examples of Machine Learning Applications in Robotics?

Examples of machine learning applications in robotics include object recognition, path planning, navigation, control, and perception. It is also used in self-supervised learning, assistive and medical technologies, multi-agent learning, and reinforcement learning.

How can Machine Learning Improve the Performance of Robots?

Machine learning can improve the performance of robots by enabling them to learn from data and experience, adapt to new situations, and make intelligent decisions based on the information they gather. This can lead to better accuracy, efficiency, and robustness in the robot’s behavior.

How is Machine Learning Used in Autonomous Vehicles?

Machine learning is used in autonomous vehicles to enable them to make decisions based on sensory data, plan routes, and navigate through complex environments. It is also used for object detection and recognition, behavior prediction, and decision making.

What is Reinforcement Learning in Robotics?

Reinforcement learning in robotics is a type of machine learning technique that involves training robots to learn by trial and error. The robot receives feedback in the form of rewards or penalties based on its actions and learns to optimize its behavior to maximize its reward.
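The trial-and-error loop can be made concrete with tabular Q-learning, a standard reinforcement learning algorithm. The sketch below uses a hypothetical five-cell corridor in which the robot earns a reward of 1 for reaching the rightmost cell; the environment, the hyperparameters, and the fixed random seed are illustrative assumptions.

```python
import random

N_STATES = 5          # cells 0..4 on a line; cell 4 is the goal
LEFT, RIGHT = 0, 1

def step(state, action):
    """Move one cell left or right; reaching the last cell pays reward 1."""
    if action == LEFT:
        next_state = max(0, state - 1)
    else:
        next_state = min(N_STATES - 1, state + 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with an epsilon-greedy exploration policy."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = rng.randrange(N_STATES - 1)      # random non-goal start cell
        for _ in range(50):                      # cap the episode length
            if rng.random() < epsilon:
                action = rng.choice((LEFT, RIGHT))                      # explore
            else:
                action = max((LEFT, RIGHT), key=lambda a: q[state][a])  # exploit
            next_state, reward = step(state, action)
            # Q-learning update: move toward reward + discounted best next value.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if state == N_STATES - 1:            # goal reached, episode ends
                break
    return q

q_table = train()
# Greedy policy for the non-goal cells, read off the learned Q-values.
policy = ["left" if q[LEFT] >= q[RIGHT] else "right" for q in q_table[:-1]]
```

The robot is never told that moving right is correct; it discovers this because actions that lead toward the reward end up with higher Q-values. Real robots face continuous states and actions, so practical systems usually replace the table with a function approximator such as a neural network.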

What is Self-supervised Learning in Robotics?

Self-supervised learning in robotics is a type of machine learning technique that enables robots to generate their own training data by learning from their environment. This can include using prior knowledge and data captured from sensors to interpret long-range ambiguous sensor data.

How is Machine Learning Used in Assistive and Medical Technologies?

Machine learning is used in assistive and medical technologies to improve the performance and reliability of robotic systems for people with disabilities and medical conditions. It is used for movement therapy, autonomous surgery, and diagnostic and therapeutic purposes.