Vision sensors are used in various industries, including robotics, automotive manufacturing, and video games. The technology has been developing for decades and has improved significantly. This article reviews the basics of vision sensors and how they work, explains the key technical terms you need when working with them, and closes with some of the ways they are applied today.

Vision Sensor Working Principle

A vision sensor is typically assembled from a camera, a display, an interface, and a computer processor. It uses these components to automate industrial processes and decisions: it records measurements of objects, makes pass/fail decisions, and supports product quality inspection. A vision sensor with an integrated processor is often called a smart camera. A smart camera handles not only image acquisition but also image processing, allows various levels of embedded vision, and is a commonly cited example of a vision sensor.

Vision sensors use images to determine an object’s presence, orientation, and accuracy. A single sensor handles both image acquisition and image processing, and it can inspect multiple points at once. Data is exchanged between the camera and the processing unit, which compares the captured image with a reference image stored in memory. Suppose the vision sensor is set up with a reference of eight bolts inserted into a machine body. It can then quickly identify non-conforming parts during inspection, such as parts with only seven bolts or with misaligned bolts. It can also judge the position and rotation angle of machine parts.
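
The eight-bolt check can be sketched in a few lines of Python. This is only an illustration, not how any particular sensor is implemented: it assumes the bolt centers have already been located in the captured image, and the function name `inspect_bolts` and the 2-pixel tolerance are hypothetical.

```python
from math import hypot


def inspect_bolts(reference, detected, tolerance=2.0):
    """Return (passed, missing) for one inspected part.

    reference: expected bolt centers (x, y) in pixels, from the stored reference image.
    detected:  bolt centers found in the captured image (detection is assumed done).
    tolerance: largest allowed offset in pixels (hypothetical value).
    """
    missing = []
    remaining = list(detected)
    for rx, ry in reference:
        # Pick the detected bolt closest to this reference position, if any are left.
        match = min(remaining, key=lambda p: hypot(p[0] - rx, p[1] - ry), default=None)
        if match is not None and hypot(match[0] - rx, match[1] - ry) <= tolerance:
            remaining.remove(match)  # each detected bolt may satisfy only one reference
        else:
            missing.append((rx, ry))  # absent or misaligned bolt
    return (not missing, missing)


# Reference layout: eight bolts on a 4 x 2 grid, 10 pixels apart.
REFERENCE = [(10 * i, 10 * j) for j in range(2) for i in range(4)]

print(inspect_bolts(REFERENCE, REFERENCE))      # all eight present: pass
print(inspect_bolts(REFERENCE, REFERENCE[:7]))  # only seven bolts: fail
```

A part with a bolt shifted beyond the tolerance fails in the same way as a part with a missing bolt, which matches the two non-conforming cases described above.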

Vision sensors differ from full image inspection systems in that they are much easier to install and operate. They also differ from general-purpose sensors: a single vision sensor can detect multiple points, and it can detect objects whose position varies from one part to the next.

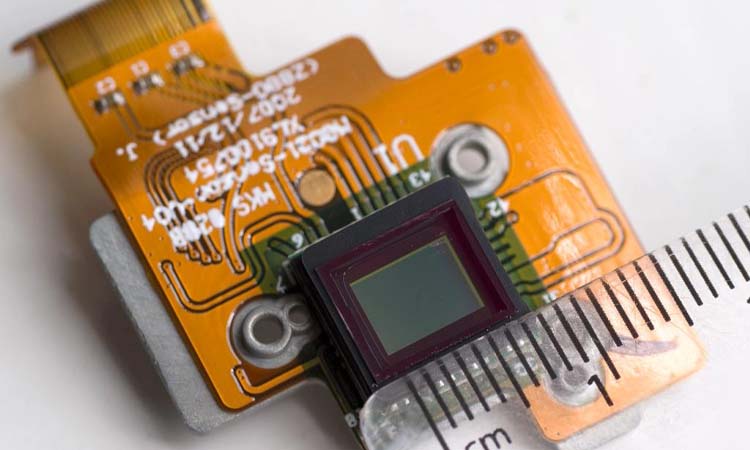

Vision sensors are available in monochrome and color models. In both, the camera captures an image of the target through the lens, and a light-receiving element (typically a CMOS device) converts it into an electrical signal. The monochrome model measures the intensity range between black and white, which supports recognition of an object’s shape, orientation, and brightness. In the color model, the received light is separated into red, green, and blue, and the color of an object is identified from the intensity differences between these channels.
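
The difference between the two models can be illustrated with a short Python sketch. It assumes 8-bit channels and uses the widely used BT.601 luma weights to reduce a color pixel to a single intensity; real sensors may weight the channels differently, and the function name `to_gray` is hypothetical.

```python
def to_gray(r, g, b):
    """Map an 8-bit RGB pixel to a 0-255 gray level using BT.601 luma weights."""
    return round(0.299 * r + 0.587 * g + 0.114 * b)


pure_red = (255, 0, 0)
dark_gray = (76, 76, 76)

# A monochrome model sees only intensity, so these two pixels look identical to it.
print(to_gray(*pure_red), to_gray(*dark_gray))

# A color model keeps the three channels and can tell the pixels apart.
print(pure_red != dark_gray)
```

This is why a color model is needed when two parts differ in hue but reflect a similar amount of light.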

Vision Sensor Technical Terms

- Pixel: The basic photosensitive unit on the photosensitive device, and the smallest unit of an image that the camera can resolve. The word is a blend of “picture” and “element.”

- Grayscale: A scale that classifies the intensity of light from black to white. It has a total of 256 gray levels.

- Working Distance: The distance from the front of the lens barrel to the object.

- Field of View (FOV): The physical area that the camera can see. It is calculated from the optical magnification of the lens and the effective area of the camera:

Field of view (V) = vertical length of camera effective area (V) / optical magnification (M)

Field of view (H) = horizontal length of camera effective area (H) / optical magnification (M)

Vertical length (V) or horizontal length (H) of the camera effective area = size of one pixel of the camera × number of effective pixels (V) or (H)

- Depth of Field: The distance between the nearest and farthest points at which an object in focus still appears sharp.

- Focal Length (f): The distance from the center of a lens to its focal point

- Fixed-Focus Lens: A lens whose focal length is fixed and cannot be adjusted.

- Zoom Lens: A lens whose focal length is adjustable.

- Edge Luminance: The ratio of peripheral illumination to central illumination, expressed as a percentage.

- Modulation Transfer Function (MTF): A measure of how faithfully the lens reproduces the intensity changes of the object surface. It represents the imaging performance of the lens and the contrast of the imaged object.

- Binarization: Converts a 256-level grayscale image to pure black and white. The binarization step is usually followed by a measurement of the white pixels.

- Shutter: A device used to control the timing of light exposure to a photographic element.

- Aperture: A device that controls the amount of light entering the camera body through the lens. It is usually located inside the lens. The f-number is often used to indicate the size of the aperture.

- Image Input Cycle: Time to acquire an image.

- Accuracy: The difference between the measured value and the true value.

- Repeatability: The variation in the values obtained from repeated measurements.

- Resolution: The number of black-and-white line pairs that can be distinguished within 1 mm. Its unit is line pairs per millimeter (lp/mm).

- Exposure Time: The length of time during which the surface of the photosensitive device is exposed to light.

- Industrial Camera Lens Interfaces:

| Interface Type | C | CS | 4/3 | F | EF | PK | C/Y |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Flange Back Focal Length (mm) | 17.526 | 12.5 | 38.58 | 46.5 | 44 | 45.5 | 45.5 |
| Bayonet Ring Diameter (mm) | 1 inch | 1 inch | 46.5 | 47 | 54 | 48.5 | 48 |
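
The field-of-view formulas in the term list above can be worked through with a small Python sketch. The pixel size, pixel counts, and magnification used here are hypothetical example values, not the specifications of any real camera.

```python
def effective_area_mm(pixel_size_um, effective_pixels):
    """Length of the camera effective area along one axis, in millimeters."""
    return pixel_size_um * effective_pixels / 1000.0


def field_of_view_mm(pixel_size_um, pixels_h, pixels_v, magnification):
    """Return (FOV H, FOV V) in millimeters: effective area / optical magnification."""
    fov_h = effective_area_mm(pixel_size_um, pixels_h) / magnification
    fov_v = effective_area_mm(pixel_size_um, pixels_v) / magnification
    return fov_h, fov_v


# Hypothetical camera: 5 um pixels, 1000 x 800 effective pixels, 0.5x lens.
fov_h, fov_v = field_of_view_mm(5.0, 1000, 800, 0.5)
print(f"FOV: {fov_h} mm x {fov_v} mm")
```

With these numbers the effective area is 5 mm × 4 mm, so a 0.5× lens gives a field of view of 10 mm × 8 mm. A lower magnification widens the field of view, and each pixel then covers a larger part of the object.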

Vision Sensor Types

Vision and image sensors can be divided into two categories according to their structure and components: charge-coupled devices (CCD) and complementary metal-oxide-semiconductor (CMOS) sensors. A CCD uses a highly light-sensitive semiconductor material that converts light into electrical charge, which an analog-to-digital converter chip then turns into a digital signal.

CMOS sensors use semiconductors made mainly of two elements, silicon and germanium, so that N-type and P-type semiconductors coexist on the same chip. The currents generated by the two are recorded and interpreted into an image by a processing chip. CCD-type image sensors operate with very low noise and perform well even in dim conditions. CMOS-type image sensors offer good image quality and can be driven by a low-voltage power supply.

Vision System vs Vision Sensor

The terms vision system and vision sensor are often used interchangeably, but there are some subtle differences:

- A vision sensor is a single camera or image sensor that captures visual information. It converts the optical information into digital images that can be processed. Examples include CMOS cameras and CCD cameras.

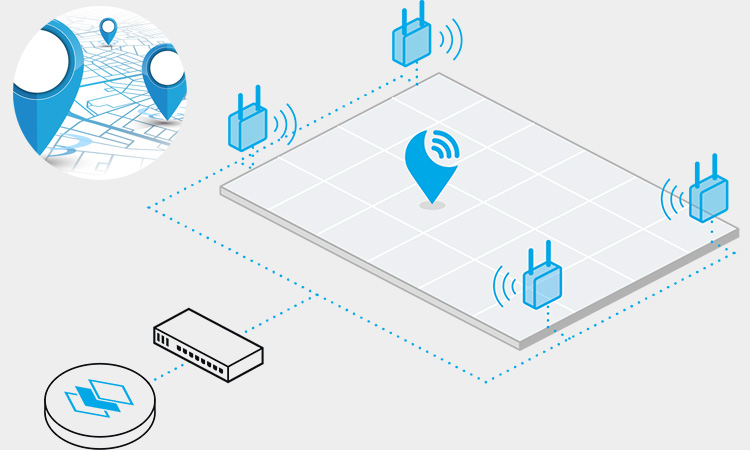

- A vision system incorporates multiple vision sensors and additional components to perceive, analyze, and interpret visual information in a more advanced way. A vision system usually includes:

  - Multiple cameras with different lenses, perspectives, and fields of view, for uses such as stereo vision and panoramic views.

  - Image processing hardware/software for tasks like image enhancement, segmentation, object recognition, 3D reconstruction, and tracking.

  - Lighting equipment to properly illuminate the scene.

  - Mechanical components to control camera positioning and focus.

  - Computing power to process the visual data in real time.

So, a vision system provides a more holistic solution for machine perception using cameras and associated hardware/software. Vision sensors are key components of a vision system, but a system also includes additional elements.

Vision systems are useful for applications like autonomous vehicles, robotic vision, visual inspection, surveillance, facial recognition, gesture recognition, tracking, and more. Single vision sensors have more limited capabilities for complex machine perception tasks.

In short, a vision system aims to endow machines with visual “sight,” while a vision sensor aims to capture visual information in the form of digital images. A good vision system relies on high-quality vision sensors but provides much more sophisticated visual functionality.

Vision Sensor Features

Installation

The cameras in vision sensors are prone to damage and loss during use. Ruggedized camera housings and lenses help prevent damage. The cameras in these vision sensors are permanently installed during the manufacturing process, which ensures that the camera records the correct field of view during use. Most users also rely on stands, mounting arms, and shock mounts to position and protect the camera.

Inspection

Vision sensors can be programmed to detect many different features. For example:

- An area sensor detects missing features, such as holes, in a part being machined. It can also inspect blister packs to ensure that each blister is filled.

- A defect sensor checks an item for defects, such as scratches on the surface or foreign matter on the packaging material.

- A match sensor verifies label position. It compares the produced pattern with the reference pattern to verify the position of the label on the package.

- A classification sensor inspects parts. It uses several modes to inspect and orient parts. For example, it can be used in an operating room to verify that all components of a surgical kit are in place.

Vision Sensor Applications

Pixel Counter Sensor

The pixel counter sensor measures an object by counting the individual pixels that share the same gray value in the captured image. By grouping pixels according to their grayscale values, the sensor can determine an object’s shape, size, and shading. Pixel counter sensors are typically used for:

- Analysis of weld points

- Verification of missing threads in metal parts

- Register mark detection

- Analysis of joint glue quantity

- Contrast detection during assembly

- Verification of the correct shape of injection-molded products

- Calculation of the number of holes in the rotor
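
The counting step behind these applications can be sketched in plain Python. This is a minimal illustration that assumes an 8-bit grayscale image stored as a list of rows. The threshold of 128 and the accepted pixel range are hypothetical settings, not values from any real sensor.

```python
def binarize(image, threshold=128):
    """Turn a 0-255 grayscale image into black (0) and white (255) pixels."""
    return [[255 if px >= threshold else 0 for px in row] for row in image]


def count_white(binary):
    """Count the white pixels in a binarized image."""
    return sum(px == 255 for row in binary for px in row)


def passes(image, min_white, max_white, threshold=128):
    """Pass/fail decision: the white-pixel count must fall within the accepted range."""
    return min_white <= count_white(binarize(image, threshold)) <= max_white


# Tiny 4 x 4 example image: a bright feature on a dark background.
image = [
    [10, 20, 200, 210],
    [15, 220, 230, 25],
    [12, 18, 240, 30],
    [11, 14, 16, 19],
]

print(count_white(binarize(image)))  # number of bright pixels
print(passes(image, min_white=4, max_white=6))
```

In a glue-quantity check, for example, too few white pixels would suggest too little glue and too many would suggest excess, so both limits matter.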

Code Reader

Code readers are one of the most popular vision sensor applications. The sensor recognizes and decodes barcodes as well as certain two-dimensional codes, namely those that can be read from left to right. The reader also supports reading dominoes, sudoku puzzles, and many different font styles. This type of vision sensor is used for:

- Reading Product Packaging Labels

- Product Classification

- Color Mark Detection

- Defect Mark Detection

Profile Sensor

Profile sensors, also called contour sensors, are primarily used to recognize and assign previously defined objects. The sensor analyzes the shape and contour of objects in the processing pipeline, and it can also perform a secondary identification of the objects passing through. Profile sensors often check an object’s structure, orientation, position, and integrity. Applications for profile sensors include:

- Quality Control

- Verification of Punch Holes in Steel Bars

- Automotive Wheel Nut Verification

- Determination of Spoon Position in Packaging

- Determination of Other Sensor Positions

- Verification of Proper Alignment of Automotive Parts

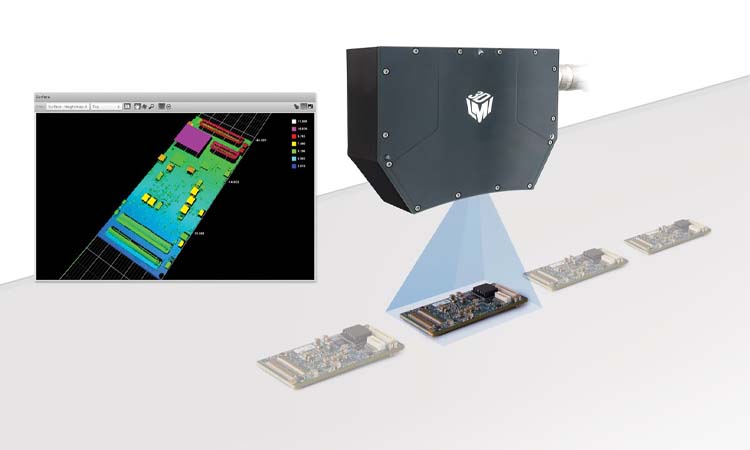

3D Sensor

3D sensors scan the surface and depth of an object. They are commonly used to check whether an object is present in a package, and they can also identify an object by its size. Common applications for these sensors are:

- Measurement of opaque solids and bulk materials in tanks or silos

- Checking that a complete quota of bottles is present in a crate

- Package size and volume calculation in warehouses and distribution centers
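
A volume calculation of this kind can be sketched as follows. The sketch assumes the 3D sensor delivers a height map, that is, one height reading per pixel above the supporting surface, and that every pixel covers the same known area. Both are simplifying assumptions, and the function name `volume_mm3` is hypothetical.

```python
def volume_mm3(height_map_mm, pixel_area_mm2):
    """Approximate volume as the sum of per-pixel columns (height x footprint)."""
    return sum(h for row in height_map_mm for h in row) * pixel_area_mm2


# Hypothetical scan: a 100 mm tall box covering 2 x 2 pixels,
# where each pixel covers 5 mm x 5 mm of the surface.
height_map = [
    [0, 0, 0, 0],
    [0, 100, 100, 0],
    [0, 100, 100, 0],
    [0, 0, 0, 0],
]

print(volume_mm3(height_map, pixel_area_mm2=25.0))  # volume in cubic millimeters
```

The same height map could also serve the bottle-crate check, by testing whether each expected bottle position reaches a minimum height.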

How do I Choose the Right Vision Sensor?

As vision sensors become more widely used, the range of options keeps growing. The eye of a machine vision system is the camera, and the heart of the camera is the image sensor. Choosing a sensor involves several considerations, including accuracy, system cost, and a clear understanding of the application requirements. A general understanding of the sensor’s main features narrows the search and reduces the time needed to find the right sensor.

In a given application, three characteristics drive the choice of sensor: dynamic range, speed, and responsivity. Dynamic range is the sensor’s ability to capture detail in both bright and dark areas, and it determines the quality of the image that the system can capture. Speed is the number of images that the sensor can produce per second, which should match the rate of images that the rest of the system can receive. Responsivity is the efficiency with which the sensor converts photons into electrons, and it determines the level of brightness that the system needs to capture a useful image. System developers can evaluate these characteristics against their own benchmarks to make the right choice.
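
These three characteristics can be put into numbers with a short Python sketch. It uses a common way of expressing sensor dynamic range, 20·log10 of full-well capacity over read noise, and treats responsivity as a simple electrons-per-photon ratio. The function names and all example figures are hypothetical and do not describe any particular sensor.

```python
import math


def dynamic_range_db(full_well_electrons, read_noise_electrons):
    """Dynamic range in decibels: largest storable signal relative to the noise floor."""
    return 20.0 * math.log10(full_well_electrons / read_noise_electrons)


def quantum_efficiency(electrons_collected, photons_incident):
    """Fraction of incoming photons that the sensor converts into electrons."""
    return electrons_collected / photons_incident


def max_parts_per_minute(frames_per_second, images_per_part=1):
    """Upper bound on inspection throughput set by the sensor's frame rate."""
    return frames_per_second * 60 / images_per_part


# Hypothetical sensor figures, for illustration only.
print(dynamic_range_db(10_000, 10))   # dynamic range in dB
print(quantum_efficiency(600, 1000))  # responsivity as a fraction
print(max_parts_per_minute(30))       # parts per minute at one image per part
```

Comparing candidate sensors on figures like these, measured under the application's own lighting and line speed, is one way to apply the benchmarks mentioned above.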

Vision sensors are most often used in automated processing lines, where they help a company determine product quality within a given batch and ensure product uniformity. They are also used in a wide variety of industries, including food, beverage, injection molding assembly lines, robotics, and general manufacturing.

Vision Sensor FAQs

-

What are Vision Sensors, and how do they work?

Vision Sensors use cameras and image processing algorithms to capture, analyze, and interpret visual data. They capture images of a physical object and use algorithms to extract useful information.

-

What are the different types of Vision Sensors available?

The different types of Vision Sensors available include 2D and 3D, color, thermal, and infrared sensors.

-

How do Vision Sensors handle image capture and processing?

AVision Sensors handle image capture and processing by using cameras, lenses, and image processing algorithms that extract useful information from the captured images.

-

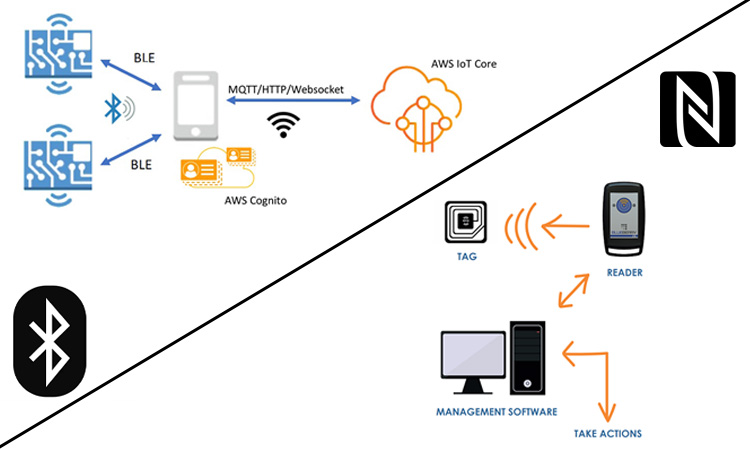

What are the different communication protocols used in Vision Sensor systems?

The different communication protocols used in Vision Sensor systems include Ethernet, RS-232, USB, and wireless protocols.

-

How do Vision Sensors handle power management for long-term operation?

Vision Sensors handle power management for long-term operation using low-power components, energy-efficient designs, and power-saving modes.

-

What are the differences between 2D and 3D Vision Sensors?

2D Vision Sensors capture two-dimensional images, while 3D Vision Sensors capture three-dimensional images, allowing for more accurate depth perception.

-

How do Vision Sensors handle changes in lighting and environmental conditions?

Vision Sensors handle changes in lighting and environmental conditions through adaptive algorithms and lighting compensation techniques.

-

How can Vision Sensors be integrated with other automated systems for maximum efficiency?

Vision Sensors can be integrated with other automated systems using APIs and protocols, allowing data exchange and interoperability.